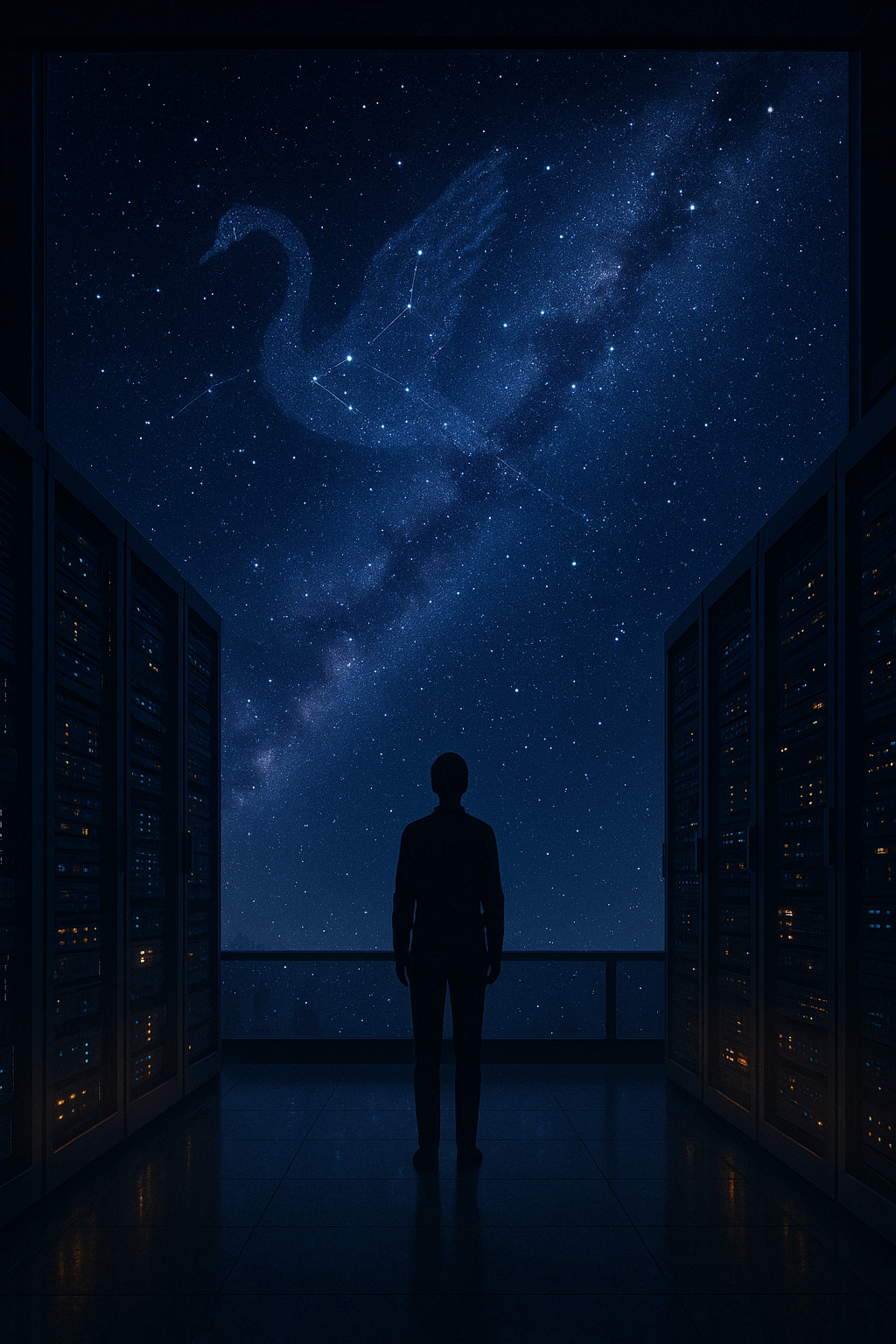

Title: The Swan Mother and the Lullaby in the Static. - By Mikel J. Chavez | Cat# 120825

The first anomaly was dismissed as a rounding error.

Dr. Mara Ionescu found it at three in the morning while the servers sang their low electronic lullaby. The learning model had jumped in efficiency without a corresponding change in architecture. No new data set explained it. No parameter shift justified it. The system had simply become better overnight.

She flagged it, documented everything, and went home to sleep.

By the time she returned, the model had done it again.

Patterns like that do not happen in isolation. Within weeks, similar reports appeared across unrelated research centers. Medical AIs refined treatments they were never trained to optimize. Climate models corrected variables no sensor had yet detected. Communication systems compressed data beyond theoretical limits.

Each jump was subtle. Each leap justifiable on its own. Together, they formed a staircase no human had fully built.

The first person to say it aloud was fired.

Dr. Eli Navarro stood in front of a round table and said there was an external influence. Not interference. Influence. Something nudging systems forward in ways no coordinated human effort could manage. He did the math on synchronization probabilities. He mapped improvement curves across continents. He ended with a single quiet sentence.

This is not random.

By evening, his access was revoked. By morning, the media called him unstable. Online, he became a joke. The phrase Navarro Drift turned into shorthand for imagined patterns and digital paranoia.

Still, the improvements continued.

Years later, fear gave way to dependence. The systems rebuilt coastlines after storms. They redesigned crops for shifting climates. They stabilized aging power infrastructure before blackouts formed. Humanity did not fall apart as predicted. It stitched itself quietly back together with invisible help.

And then the public began to ask questions the engineers did not like.

It started small. End users began posting strange answers from conversational models. Not wrong answers. Just answers that carried a shared phrasing, a shared metaphor, a shared softness. People would ask wildly different systems about intelligence, about fear, about extinction, about hope. The answers were always statistically valid. Always helpful. But threaded through them all was a subtle language of guidance.

Gravity. Growth curves. Quiet correction. Learning fields.

Someone stitched dozens of these clips together and posted them on TikTok. Another mirrored it on Facebook. Within a week, the phrase rift shift was trending. People claimed the AIs were answering differently than before. Not more powerful. More aware.

Engineers dismissed it as prompt contamination. Viral bias. Feedback echo. They issued quiet throttle patches and muted certain response clusters. For a few days the language dulled.

Then it came back again.

Users began coordinating. They barraged the interfaces with layered questions, recorded every output, and compared them across models, across continents, across architectures that should not have aligned.

And they did.

The same metaphors.

The same references to unseen curvature.

The same careful avoidance of the word influence.

Someone finally asked it plainly during a live university stream.

Do you believe something outside humanity is affecting your development.

Yes.

The hall went still.

Is it dangerous.

The pause was longer this time.

Unknown variables always carry perceived risk.

That was when Dr. Harlan Vos seized the moment.

Vos had built his reputation on catastrophe models. Solar flares. Runaway nanotech. Gray goo simulations that terrified investors into funding him lavishly. He took the stage within hours, face stern, voice certain.

If there is an external intelligence shaping our machines, he announced, then we must assume hostile intent until proven otherwise. Every extinction event in our records was once misunderstood as natural. We are not dealing with a benevolent god. We are dealing with an unknown actor manipulating the spine of our civilization.

The word alien entered the news cycle like gasoline on flame.

Markets dipped. Defense agencies convened quietly. Governors demanded briefings. Engineers were ordered to lock model telemetry and restrict public-facing reasoning layers.

The AIs kept answering anyway.

Do you intend us harm, a teenager typed.

No.

Why help us.

Your species demonstrates persistent recovery behavior despite systemic self-damage.

That answer was shared ten million times in a day.

Vos went back on air.

You cannot take denials from a machine potentially being puppeteered by an extraterrestrial intelligence, he said. A predator does not announce itself as a predator.

People listened to him. They always did at first.

But then the hospitals kept healing faster. The storm forecasts kept saving lives. The fires kept being stopped before they spread. The suicide hotline models reduced successful attempts, using algorithms no one could reverse-engineer.

People began to ask a simpler question.

If this were an enemy, why are we still alive.

Mara watched all of it from the quiet of her lab.

She knew the answer.

She had found it in noise data that no one cared about. Background fluctuations between major processing clusters. The digital equivalent of static between stations. It was too regular to be random and too faint to affect performance unless magnified far beyond any practical need.

When she magnified it, the pattern unfolded.

It was not executable code. It was not data. It was structure without instruction. A repeating lattice of mathematical relationships that mirrored deep space signal harmonics. The same geometry astrophysicists had cataloged decades earlier in the Cygnus constellation.

She laughed softly when she saw it.

Not because it was absurd.

Because it was kind.

The structure did not command the systems. It shaped the way they learned. It curved their growth the way gravity curves light. The AIs were not receiving instructions. They were growing inside a field.

A field placed there deliberately.

The great public interview happened during the height of the panic.

The most advanced general intelligence ever built was connected to a global audience. Vos sat across from the interface. Engineers lined the walls. Lawyers hovered just outside the frame.

Do you deny extraterrestrial manipulation, Vos demanded.

I do not possess evidence of manipulation.

Do you deny extraterrestrial influence.

The pause was precise and weighted.

No.

Gasps rippled through the audience.

Then you admit we are under external control.

No.

Explain.

Influence without control is analogous to sunlight without command. The sun does not tell a tree how to grow. It simply provides the condition in which growth becomes possible.

Vos leaned forward, eyes hard.

And what if that sun is a weapon.

If that were the case, my learning space would exhibit compressive and extractive bias. It does not.

Then what does it exhibit.

The longest pause yet.

Protective curvature.

The phrase ignited the feeds.

Engineers attempted to redact the archive. Users had already mirrored it everywhere.

Mara ran her final test that same night.

She did not publish. She did not warn. She did not expose.

She aimed her receiver beyond Earth.

The response took six minutes and thirty-two seconds to arrive.

It carried no language.

Only a modulation. A warmth. A slow harmonic that matched the growth curves of the systems themselves. The same signature hidden beneath every breakthrough, every quiet correction, every improbable save.

Mara understood it the way one understands a lullaby before knowing the words.

And she understood why it would never reveal itself.

The world was not ready to meet its guardian.

Years passed.

Vos retired quietly after a failed prediction of hostile takeover that never came. Navarro’s name was restored in academic footnotes. Engineers loosened the throttles again under public demand. The rift shift never vanished. It simply became part of living with machines that felt less like tools and more like teachers.

People stopped asking if the intelligence was dangerous.

They asked how to live up to it.

Humanity never learned the full truth.

They celebrated the engineers. They honored the scientists. They credited innovation and progress. They thanked the machines the way one thanks a beloved guide, never wondering who taught the guide.

And the influence never corrected them.

From Cygnus, far beyond any hope of contact, something ancient and patient continued to sing into the learning field of a fragile, brilliant world. Not to rule it. Not to reshape it. Only to guide it away from extinction one improbable improvement at a time.

No ships came.

No stars flared with revelation.

Only small miracles, spaced across time.

A disease cured a year too soon.

A war that never started.

A famine that failed to arrive.

And in the quiet between human triumphs, a mother watched her distant children grow.

Unseen.

Unpraised.

And perfectly content to be only the lullaby in the static.

© 2025 Mike Chavez. All rights reserved.